Death By AI

Short History of AI

AI stands for Artificial Intelligence. It is humanity's effort to create intelligence in electronic or silicon hardware. It carries the name artificial because it is man-made and separate from the creations of nature. Though the history of AI is thousands of years old [1], modern AI has its roots in the 20th century. In 1956, a group of scientists (which included heavyweights in the field such as Marvin Minsky, John McCarthy and Claude Shannon) met at Dartmouth College and conceived a new field called “artificial intelligence.” Their premise was to create thinking machines and discover the scientific basis of thought, mind and the computational processes of the brain. Earlier than the Dartmouth meeting came the collaborative work of Warren McCulloch (MIT Professor) and Walter Pitts, which set the basis of the neural network as we presently know it [2]. The two researchers were influenced by the ideas of Gottfried Leibniz, who held that thoughts were built from separate components which combine to create concepts and facts, and that with the addition of logical rules all of human knowledge can be produced. Further, McCulloch was a doctor by profession and saw the human brain as nothing different than a computing machine. As such, all thoughts are computable. The neural network was an outcome of reverse-engineering the workings of the brain's neurons and synapses, and of the intriguing binary quality of neurons: a neuron either sends or does not send an electrical signal.

In 1969, the Minsky XOR problem was published [3]. It stated that a single-layer perceptron (the simplest neural network) is unable to solve the XOR problem (give a true output if the two inputs differ, else give a false output). This innocuous problem proved to be devastating to the AI field and it caused the AI winter to set in. It took years for the field to recognize that a multi-layer perceptron can solve Minsky's problem. Another advance that brought about the resurgence of AI was the backpropagation algorithm, which allows the neural weights to be adjusted in relation to the error accumulated on the predictions [4]. Faster computing power with the advent of the GPU (Graphics Processing Unit) and the emergence of large data sets have allowed the widespread adoption of neural network and machine learning technologies. In my personal experience, the advent of faster computers or online clusters and open-source data sets has been a game-changer. The recent invention of the Transformer has become a staple for making learning models work faster and better in all AI applications [5].
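To make the XOR limitation concrete, here is a minimal Python sketch. It is not taken from Minsky and Papert's book: the function names, the search grid and the hand-picked weights of the two-layer network are all assumptions chosen for illustration. It searches a coarse grid of weights for a single-layer perceptron that reproduces XOR, and then shows a two-layer network that does.

```python
from itertools import product

# XOR truth table: the output is 1 only when the two inputs differ.
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def step(z):
    """Threshold activation: fire (1) if the weighted sum is positive."""
    return 1 if z > 0 else 0


def single_layer(x, w1, w2, b):
    """A single-layer perceptron: one weighted sum followed by a threshold."""
    return step(w1 * x[0] + w2 * x[1] + b)


def solves_xor(predict):
    """Check a predictor against all four rows of the truth table."""
    return all(predict(x) == y for x, y in XOR_DATA)


def search_single_layer(grid):
    """Try every (w1, w2, b) on the grid and return those that solve XOR."""
    return [
        (w1, w2, b)
        for w1, w2, b in product(grid, repeat=3)
        if solves_xor(lambda x: single_layer(x, w1, w2, b))
    ]


def two_layer(x):
    """Two-layer network with hand-picked (not learned) weights."""
    h_or = step(x[0] + x[1] - 0.5)    # fires if at least one input is 1
    h_and = step(x[0] + x[1] - 1.5)   # fires only if both inputs are 1
    return step(h_or - h_and - 0.5)   # "OR but not AND" is XOR


grid = [i / 2 for i in range(-8, 9)]  # -4.0 to 4.0 in steps of 0.5
print("single-layer solutions:", search_single_layer(grid))
print("two-layer outputs:", [two_layer(x) for x, _ in XOR_DATA])
```

The grid search finds nothing because XOR is not linearly separable, so no choice of weights can work, on the grid or off it. The hidden layer gets around this by first computing OR and AND and then combining them.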

In a nutshell, the main ingredients required to make modern AI possible are: the Convolutional Neural Network, the backpropagation algorithm, large datasets and GPU computing. These main components, combined with open-source programming libraries and packages, have made it easy to learn, build and deploy state-of-the-art AI models.

LLM

In the past five years, we have noticed the rise of LLMs (Large Language Models) that are able to understand our language and create accurate responses. LLMs are a sub-field of NLP (Natural Language Processing), which is essentially the study of human language and the search for a computational basis for language generation. NLP falls into two camps: scientific and statistical. The scientific camp states that language is not only computation but that there is more to the story than we can currently explain. The unexplained involves things such as: the origin of language, the mental processes, the mind's contribution to language and the conscious aspect. This school of NLP is spearheaded by folks such as Noam Chomsky and the like. On the other hand, we have the statistical camp, which states that all languages are probabilistic in nature and computable by a Turing machine (so a machine can, in principle, perform the task of language acquisition as humans are able to). This group is led by Peter Norvig and his followers [6]. So who is winning? Currently, we see statistical models prevailing in the LLM domain. Essentially, the whole neural network can be seen as a probabilistic calculator, which categorizes and makes predictions on the given input streams.
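The "probabilistic calculator" view can be illustrated with a toy bigram model in Python. This is only a sketch under strong assumptions: real LLMs use neural networks over tokens rather than raw word counts, and the corpus and function names here are made up for the example. The idea it shows is the same, though: given what came before, assign a probability to each possible next word.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count how often each word follows each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, word):
    """Turn the raw follow-up counts for `word` into probabilities."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # the model has never seen this word
    return {w: c / total for w, c in followers.items()}


# Hypothetical toy corpus, chosen only for illustration.
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigram(corpus)
print(next_word_probs(model, "the"))
print(next_word_probs(model, "dog"))
```

In this corpus "the" is followed by "cat" twice and by "mat" and "fish" once each, so the model gives "cat" a probability of 0.5 and the other two 0.25 each. For a word it has never seen, such as "dog", it has nothing to say.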

We find that the two schools of thought in the LLM field work to achieve different goals. The scientific group seeks to answer the why questions, while the statistical group tries to answer the hows. At the core, we can say that there are scientific queries and engineering possibilities. Still, the notions of Chomsky shouldn't be ignored and must be taken into consideration if we actually want to understand what language really is. These are the points proposed by Chomsky (though they are explained in my own simple language):

- Language is a tool to create thought. Language is not designed for communication. Communication is a by-product of the properties of language.

- Language is not only computation but has metaphysical properties that modern science, currently, cannot explain. This brings in the notion of the origin of language. There are many theories but nothing concrete for the time being.

- Animals have sound. Humans are the only species that possesses the power of language.

- Computers can imitate understanding and respond to our queries, but that doesn't mean a computer is a rational agent or conscious.

- The origin of language might possibly lie in a genetic endowment. It is in our genes to create language.

- Language is infinite. We use a set of rules (grammar) and a set of alphabets or characters to create an endless stream of thoughts and ideas. This is known as Galileo's theorem.
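The last point, that a finite set of rules can produce an unbounded number of sentences, can be sketched in a few lines of Python. The grammar below is a made-up toy, not one of Chomsky's: it has a single recursive rule, S -> "it rains" | NAME "thinks that" S, and two hypothetical names. Each extra level of nesting doubles the number of new sentences, and nothing in the rule itself sets a limit on the depth.

```python
def sentences(max_depth):
    """Enumerate sentences of the toy rule S -> 'it rains' | NAME 'thinks that' S.

    `max_depth` is the maximum number of times the recursive branch is used.
    """
    names = ["Alice", "Bob"]   # hypothetical vocabulary
    level = ["it rains"]       # sentences using the recursion exactly 0 times
    result = list(level)
    for _ in range(max_depth):
        # Wrap every sentence of the previous level in one more clause.
        level = [f"{name} thinks that {s}" for name in names for s in level]
        result.extend(level)
    return result


for depth in range(4):
    print(depth, len(sentences(depth)))
print(sentences(2)[-1])
```

With two names the count of sentences up to depth d is 2^(d+1) - 1, so it grows without bound as the depth increases, even though the grammar itself never changes.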

These are the important points that I picked from the scientific perspective. Statistical models have nothing, even remotely, to say about such notions. As long as it works, it will be adopted. This is the philosophical outlook of current AI researchers. This is good for engineering and for market shareholders but bad for scientists. As users of chatbots we must understand that the mysteries of language remain, even though the bots can write essays, create PDFs and write code in a matter of seconds, and look and sound as if we are talking to a real person. It's an imitation of speech; it is not the comprehension and understanding of language. Chatbots are still similar to a smart parrot.

I do believe that current LLMs will reach a plateau and will have a similar fate as the iPhone. The bots will get better over the course of time, but the improvement will be limited in scope and not meaningful to the average user. iPhones, each year, don't get better in any shape or form. A phone is designed to make phone calls (which surprisingly doesn't work that well) and to send texts. All the other properties are advertisements meant to keep the customers engaged for as long as possible. I do think that technology progresses, but large shifts in added value for the consumer don't happen annually. A phone from 5 years ago is still as good to use as the newest one. The same will be seen in LLMs. For instance: GPT-3 could solve a high school math test. GPT-4 can solve a college-level math test. GPT-5 might be able to solve International Math Olympiad problems, etc. It is possible that we will have a GPT-X that can solve the prized problems of the century, such as the Riemann hypothesis, the Goldbach conjecture, etc. But this will be a ‘large shift’ as previously mentioned. It won't occur in the coming decade or the one after it. Such models will take more time, and newer ideas will be required to make unique and original thinking bots. As long as the bots are trained on internet data, they will only know what they have been trained on. There can be no new thought without experience or originality.

AGI

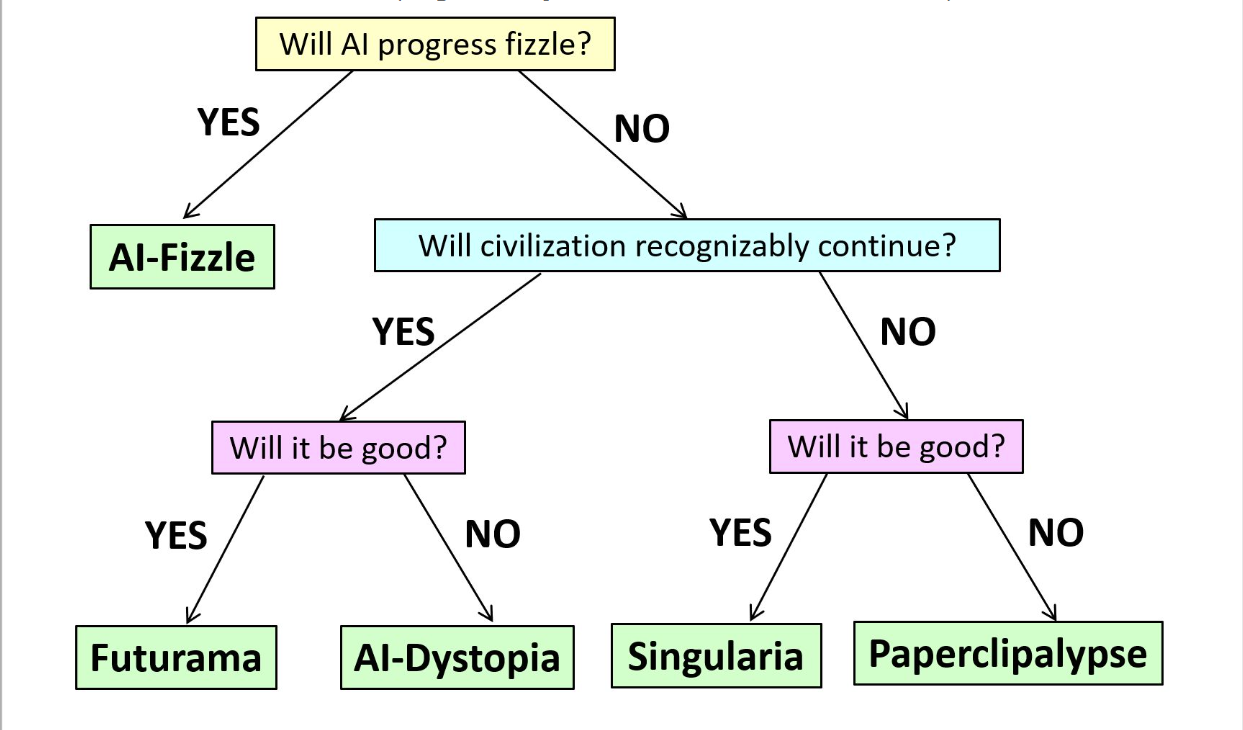

AGI (Artificial General Intelligence) is a term used for thinking machines. These machines will be capable of having original thoughts, analogous to human beings. But due to their immense memory and computing power, these machines will have the capacity for richer and more profound thoughts. Still, AGI has been seen as a philosophical topic rather than an actual scientific endeavour. But I will present a diagram (shown above) that places AGI in context [8]. This is the work of Scott Aaronson (legendary computer theorist).

It states that our future can take five directions:

- AI-Fizzle: AI will at best be a consumer product. AI research will plateau and we will have AI products that will serve the modern world, such as: self-driving cars, nursing robots, sophisticated large language models and privacy systems. In this situation, AI will be over-engineered but there will be no scientific breakthroughs. The future won't look any different than the present.

- Futurama: This is the happy path in which AI becomes a true revolution that helps to elevate the masses. AI will work, side by side, with human specialists (in a variety of domains) to curb poverty and the spread of diseases and to make monetary gains.

- AI-Dystopia: This is the opposite of Futurama, in which a few elites rule the world through the powers endowed by AI and modern machines. In this world, the notion of Big Brother becomes a reality: each thought and action of the citizens will be monitored and the masses will be controlled with the assistance of AI.

- Singularia: In this world, AI will become sentient and exponentially surpass humans in intelligence. Humans may become obsolete as the machines can perform all the tasks that are commonly done by Homo sapiens. Humans may potentially integrate themselves with the machines to become superhuman. Further, AI may be seen as a demigod and be kind to humans. It will help us to make a paradise on earth and create a timeless simulation in which all our desires and pleasures are taken care of.

- Paperclipalypse: The AI becomes extremely powerful and chooses to be evil. In this scenario, the AI doesn't see value in human life and sees humans as a nuisance. It will work to imprison, subordinate or eradicate human civilization and rule the world as a singular entity.

It is more than likely that we will head towards an AI-Dystopia. As Leopold Aschenbrenner states in his interesting article, we are heading towards the militarisation of AI technology [7]. Just as the internet and nuclear power grew out of the research, funding and support of the US government, there is a high chance that AI will follow the same course. The ruling elite and the military-industrial complex would want to control the AI and use it as a weapon against democratic forces, the masses and adversarial nation-states. We will see the realization of Orwell's nightmare, Big Brother marching all over the innocent people.

I hold the belief that the creation always carries the remnants of the creator. As such, AGI will be the supreme creation of humanity (if it can be conceived at all) and will likely be the last one. Further, there is a possibility that AGI will replicate humanity's dark nature, such as: racism, hate, anger, etc. It will either make decisions, individually, to maximize its gain, a course of action that may spell doom for humanity, or else it will serve the masters of mankind and leave the masses to suffer. We must remain skeptical of modern technologies and critique the policies and doings of the politicians.

References

[1] Stuart Russell and Peter Norvig, Artificial Intelligence: A Modern Approach, 2004

[2] https://nautil.us/the-man-who-tried-to-redeem-the-world-with-logic-235253/

[3] Marvin Minsky and Seymour A. Papert, Perceptrons: An Introduction to Computational Geometry, 1969

[4] Paul J. Werbos, The Roots of Backpropagation: From Ordered Derivatives to Neural Networks and Political Forecasting, 1994

[5] Ashish Vaswani et al., Attention Is All You Need, 2017

[6] https://norvig.com/chomsky.html

[7] Leopold Aschenbrenner, Situational Awareness: The Decade Ahead, 2024

[8] https://scottaaronson.blog/?p=7266